🟡 LLMs Using Tools

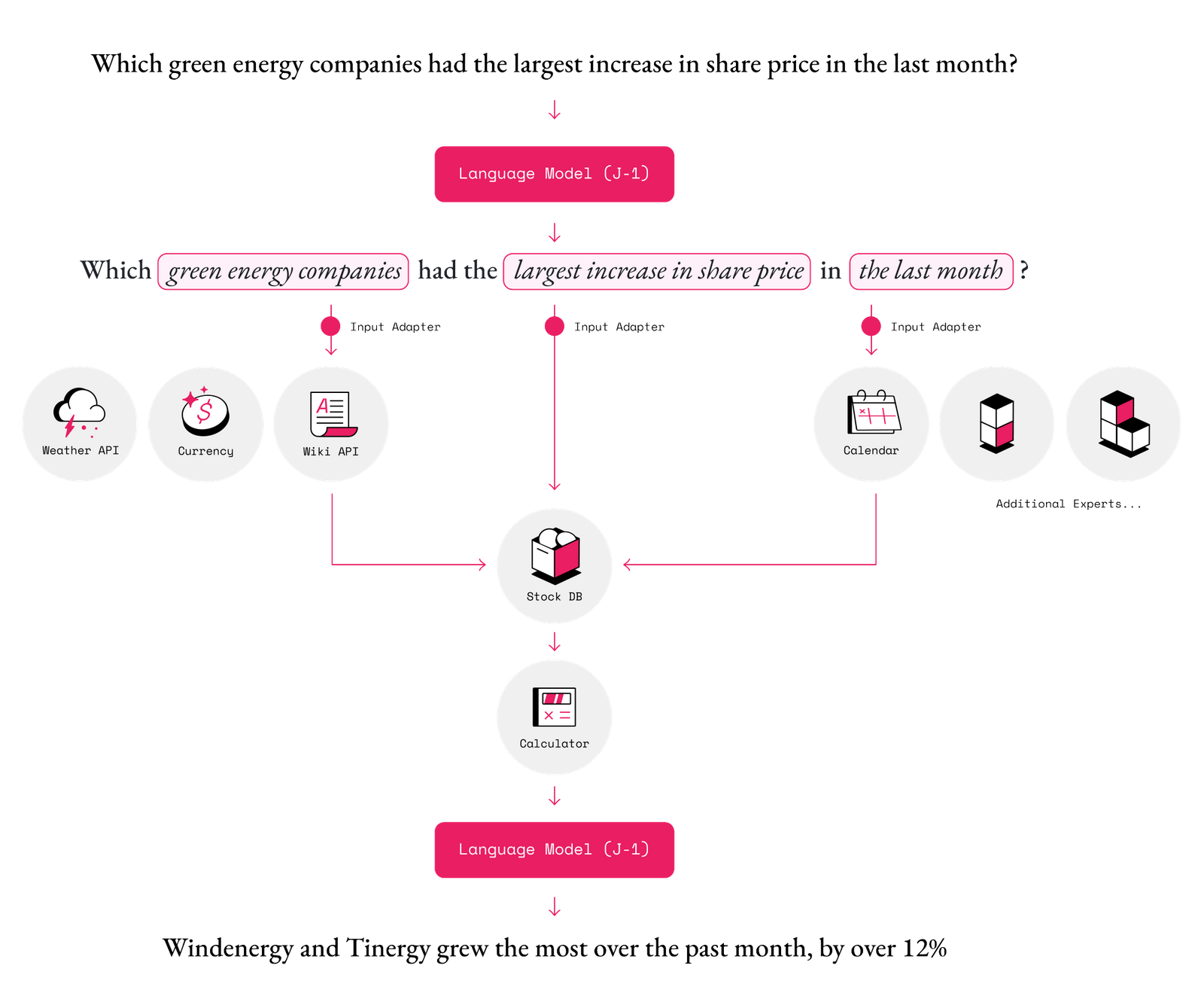

MRKL Systems (Modular Reasoning, Knowledge and Language, pronounced "miracle"; Karpas et al., 2022) are a neuro-symbolic architecture that combines LLMs (neural computation) with external tools such as calculators (symbolic computation) to solve complex problems.

A MRKL system is composed of a set of modules (e.g. a calculator, a weather API, or a database) and a router that decides how to 'route' incoming natural language queries to the appropriate module.

A simple example of a MRKL system is an LLM that can use a calculator app. This is a single-module system, where the LLM is the router.

When asked "What is 100*100?", the LLM can choose to extract the numbers from the prompt and then tell the MRKL system to use a calculator app to compute the result. This might look like the following:

What is 100*100?

CALCULATOR[100*100]

The MRKL system would see the word CALCULATOR and plug 100*100 into the calculator app.
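To make the dispatch step concrete, here is a minimal Python sketch of a system that recognizes a `NAME[argument]` output and hands the argument to a matching module. The names `MODULES`, `calculator`, and `run_tool_call` are hypothetical and chosen for illustration; they are not from the MRKL paper. The calculator is a small local evaluator rather than a real calculator app.

```python
import ast
import operator
import re

# Arithmetic operators the toy calculator module accepts.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Pow: operator.pow,
    ast.USub: operator.neg,
}


def calculator(expression: str):
    """Evaluate a plain arithmetic expression without calling eval()."""

    def _eval(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.operand))
        raise ValueError(f"unsupported expression: {expression!r}")

    return _eval(ast.parse(expression, mode="eval").body)


# Hypothetical module registry: tool name -> callable.
MODULES = {"CALCULATOR": calculator}

# Matches an output that consists solely of NAME[argument].
TOOL_CALL = re.compile(r"^\s*([A-Z_]+)\[(.*)\]\s*$", re.DOTALL)


def run_tool_call(llm_output: str):
    """Dispatch an LLM output such as 'CALCULATOR[100*100]' to its module."""
    match = TOOL_CALL.match(llm_output)
    if match is None:
        return llm_output  # No tool requested; pass the text through.
    name, argument = match.groups()
    if name not in MODULES:
        raise KeyError(f"unknown module: {name}")
    return MODULES[name](argument)


print(run_tool_call("CALCULATOR[100*100]"))  # 10000
```

Adding another tool in this sketch only requires a new entry in `MODULES`; the parsing and dispatch code stays the same.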

This simple idea can easily be expanded to various symbolic computing tools.

Consider the following additional examples of applications:

- A chatbot that can answer questions about a financial database by extracting information from a user's text to form a SQL query.

What is the price of Apple stock right now?

The current price is DATABASE[SELECT price FROM stock WHERE company = "Apple" AND time = "now"].

- A chatbot that can answer questions about the weather by extracting information from the prompt and using a weather API to retrieve the information.

What is the weather like in New York?

The weather is WEATHER_API[New York].

- Or even much more complex tasks that depend on multiple data sources, such as the following:
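One way to picture a response that draws on more than one source is the Python sketch below. It is an illustration only, not the example from the original paper: the in-memory table, the stubbed weather lookup, the price and weather values, and the names `fill_placeholders`, `database`, and `weather_api` are all assumptions made up for this sketch. The `time` column from the query above is left out to keep the table small.

```python
import re
import sqlite3

# Hypothetical in-memory stand-in for a financial database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE stock (company TEXT, price REAL)")
conn.execute("INSERT INTO stock VALUES ('Apple', 123.45)")  # made-up price


def database(query: str) -> str:
    """Run a query and return the first column of the first row as text."""
    row = conn.execute(query).fetchone()
    return "no result" if row is None else str(row[0])


def weather_api(city: str) -> str:
    """Stub for a weather service; a real module would call an external API."""
    canned = {"New York": "sunny"}  # made-up data
    return canned.get(city, "unknown")


# Hypothetical module registry: placeholder name -> callable.
MODULES = {"DATABASE": database, "WEATHER_API": weather_api}

# Finds every NAME[argument] placeholder embedded in a longer text.
PLACEHOLDER = re.compile(r"([A-Z_]+)\[([^\]]*)\]")


def fill_placeholders(llm_output: str) -> str:
    """Replace each known placeholder with the result of its module."""

    def _replace(match):
        name, argument = match.groups()
        module = MODULES.get(name)
        # Unknown placeholders are left untouched.
        return match.group(0) if module is None else module(argument)

    return PLACEHOLDER.sub(_replace, llm_output)


text = (
    "Apple is trading at "
    "DATABASE[SELECT price FROM stock WHERE company = 'Apple'] "
    "and New York is WEATHER_API[New York]."
)
print(fill_placeholders(text))
# Apple is trading at 123.45 and New York is sunny.
```

Running model-written SQL directly, as this sketch does, is only reasonable against a throwaway database; a real system would likely restrict the connection to read-only access or validate the query first.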

An Example

I have reproduced an example MRKL System from the original paper, using Dust.tt, linked here.

The system reads a math problem (e.g. What is 20 times 5^6?), extracts the numbers and the operations, and reformats them for a calculator app (e.g. 20*5^6). It then sends the reformatted equation to Google's calculator app and returns the result. Note that the original paper performs prompt tuning on the router (the LLM), but I do not in this example. Let's walk through how this works:

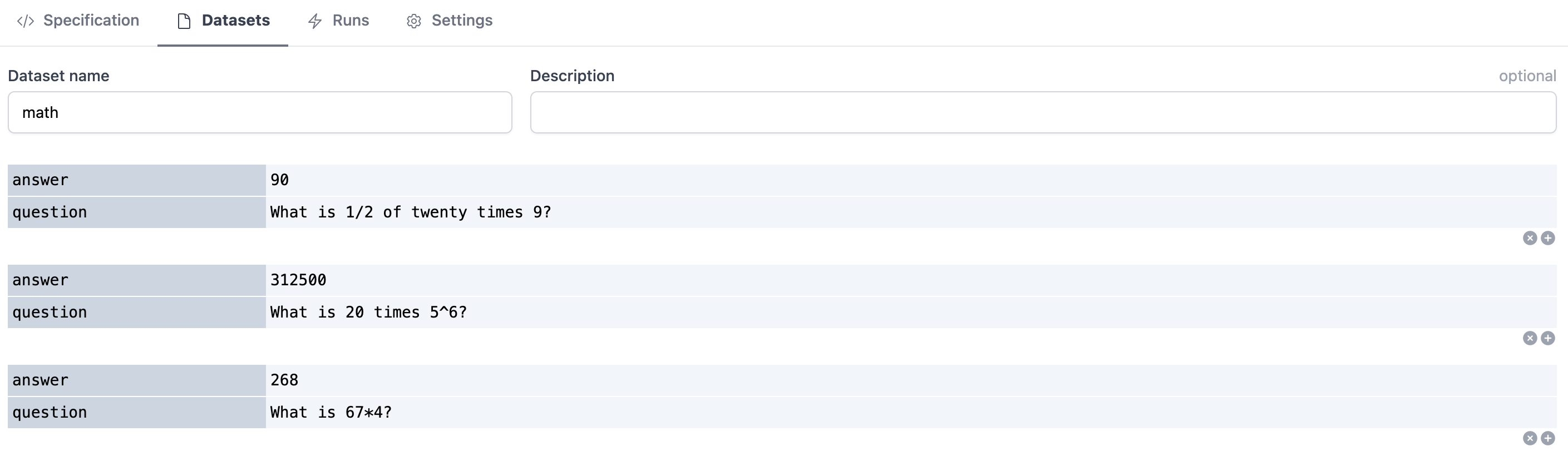

First, I made a simple dataset in the Dust Datasets tab.

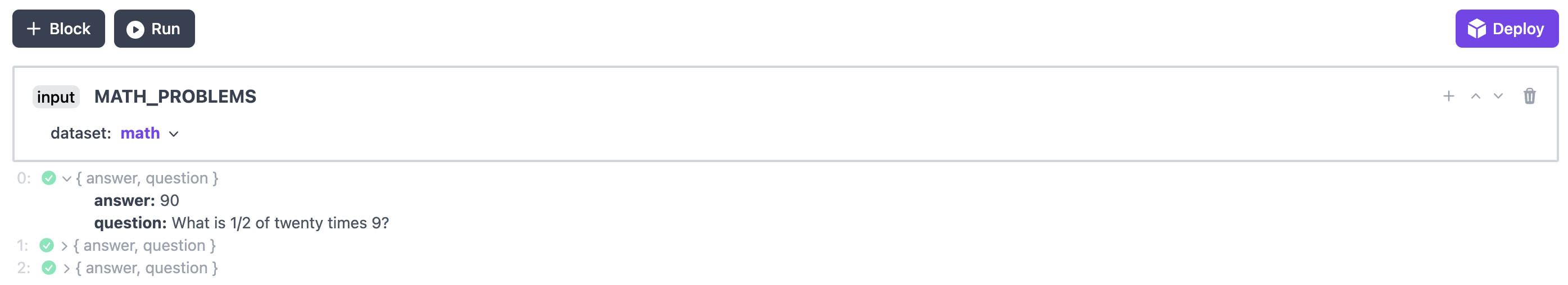

Then, I switched to the Specification tab and loaded the dataset using a data block.

Next, I created an llm block that extracts the numbers and operations. Notice how in the prompt I told it we would be using Google's calculator. The model I use (GPT-3) likely has some knowledge of Google's calculator from pretraining.

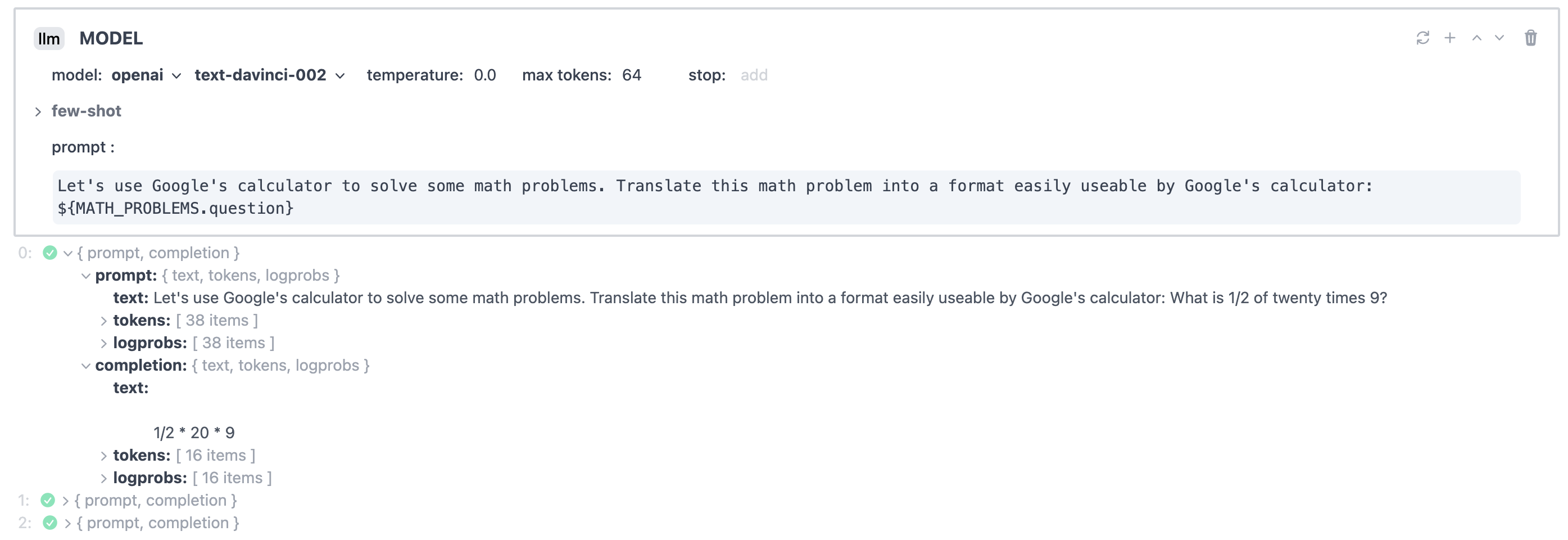

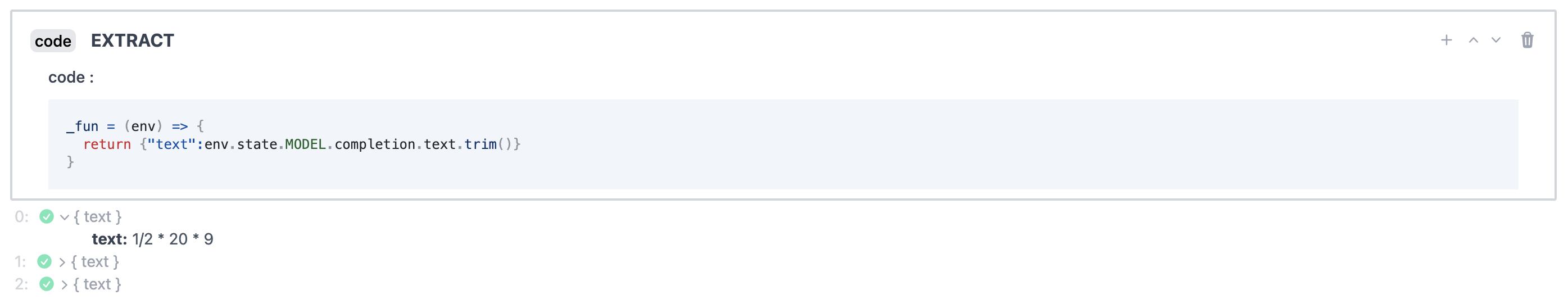

Then, I made a code block, which runs some simple JavaScript code to remove spaces from the completion.

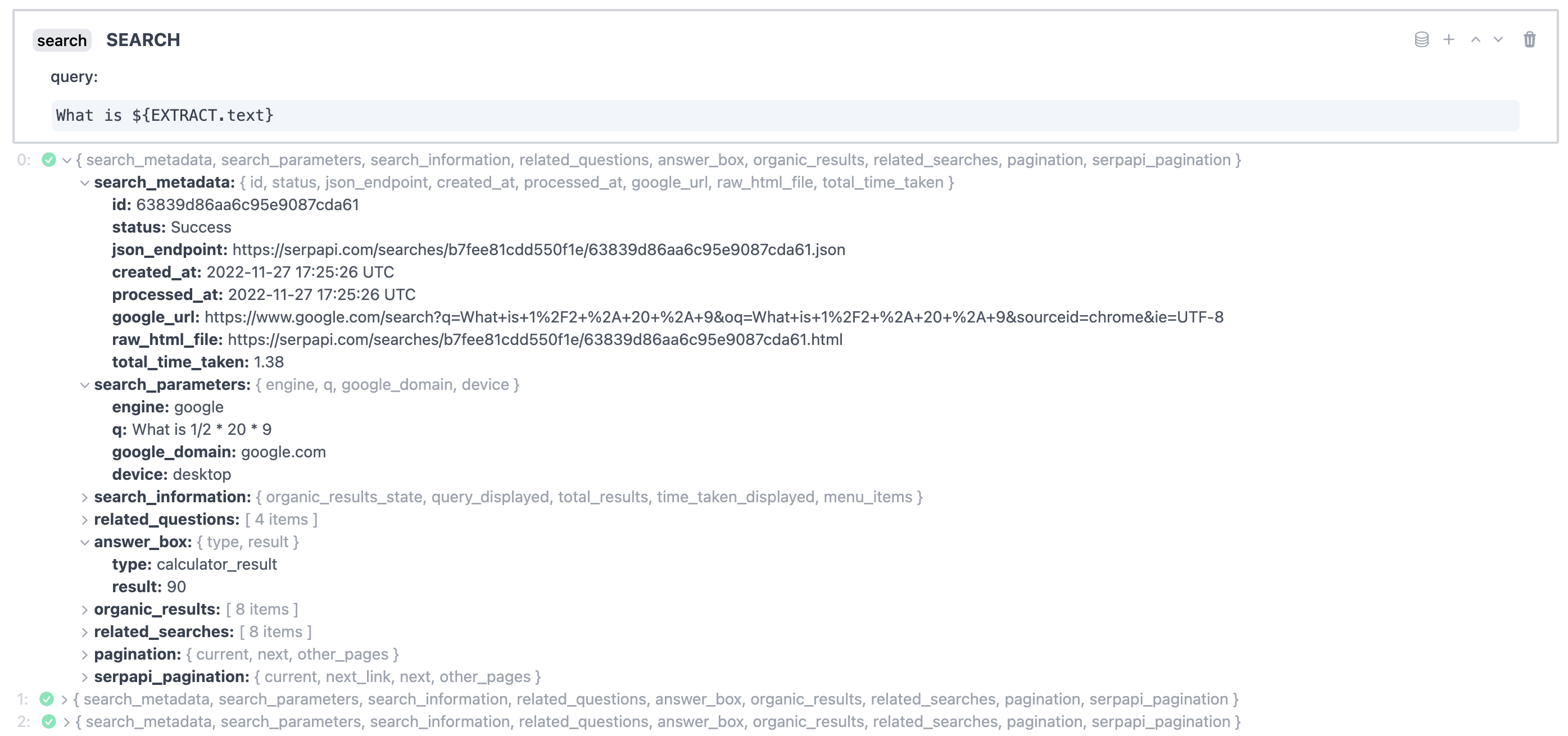
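The code in the playground is JavaScript and is shown only in the screenshot. As a rough Python analogue of the same cleaning step, the sketch below strips whitespace from a completion. The function name `clean_completion` is hypothetical, and removing all whitespace (newlines and tabs as well as spaces) is an assumption that goes slightly beyond "remove spaces".

```python
def clean_completion(completion: str) -> str:
    """Remove every whitespace character from the model's completion."""
    # str.split() with no arguments splits on any run of whitespace,
    # so joining the pieces drops spaces, tabs, and newlines alike.
    return "".join(completion.split())


print(clean_completion(" 20 * 5^6 \n"))  # 20*5^6
```

A step like this keeps the query sent to the calculator compact and predictable even when the model pads its output.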

Finally, I made a search block that sends the reformatted equation to Google's calculator.

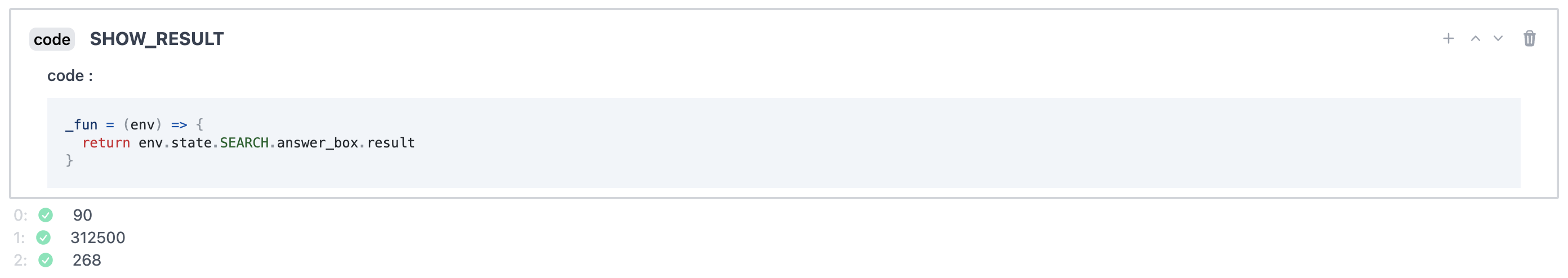

Below we can see the final results, which are all correct!

Feel free to clone and experiment with this playground here.

Notes

MRKL was developed by AI21 and originally used their J-1 (Jurassic-1) LLM (Lieber et al., 2021).

More

See this example of a MRKL System built with LangChain.

- Karpas, E., Abend, O., Belinkov, Y., Lenz, B., Lieber, O., Ratner, N., Shoham, Y., Bata, H., Levine, Y., Leyton-Brown, K., Muhlgay, D., Rozen, N., Schwartz, E., Shachaf, G., Shalev-Shwartz, S., Shashua, A., & Tenenholtz, M. (2022). MRKL Systems: A modular, neuro-symbolic architecture that combines large language models, external knowledge sources and discrete reasoning.

- Lieber, O., Sharir, O., Lenz, B., & Shoham, Y. (2021). Jurassic-1: Technical Details and Evaluation. White paper, AI21 Labs. URL: https://uploads-ssl.webflow.com/60fd4503684b466578c0d307/61138924626a6981ee09caf6_jurassic_tech_paper.pdf